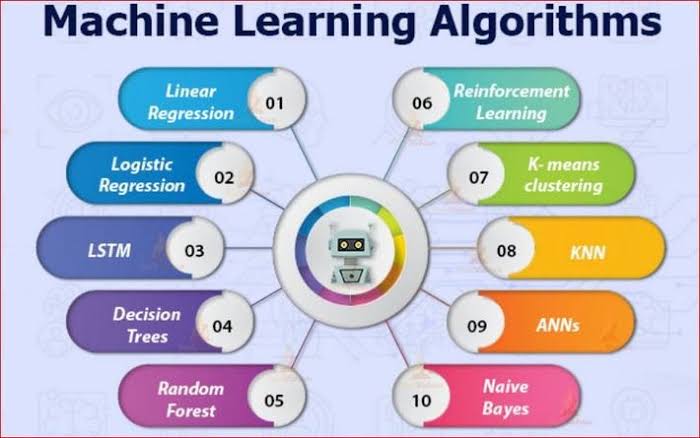

In the evolving landscape of artificial intelligence, machine learning algorithms serve as the core machinery behind automation and smart decision-making.

As of June 2025, machine learning is at the heart of innovations powering industries such as healthcare, finance, robotics, e-commerce, education, and more. For aspiring professionals and experts in artificial intelligence, mastering key algorithms is essential for building intelligent systems and drawing meaningful insights from data. The following are the top ten machine learning algorithms to learn and understand thoroughly to stay relevant in today's fast-evolving tech landscape.

1. Linear regression

Linear regression is a fundamental supervised learning algorithm that predicts a continuous numerical value by fitting a linear relationship between the independent variables and the dependent variable. It remains a valuable starting point for beginners and a practical method for real-world applications such as forecasting, cost estimation, and trend analysis. Despite the rise of complex models, linear regression is still widely used for its simplicity, efficiency, and interpretability.
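To make the idea of fitting a linear relationship concrete, here is a minimal sketch of ordinary least squares for a single input variable. The function name `fit_line` and the toy data are illustrative assumptions, not part of any library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical toy data generated from y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

With more than one feature, the same least-squares idea is usually solved with matrix methods rather than these closed-form sums.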

2. Logistic regression

Logistic regression is commonly applied to classification problems where the outcome is binary, such as spam vs. not spam or accepted vs. rejected. It uses the logistic function to model probabilities and is especially useful in situations where understanding the effect of predictor variables on a binary outcome is crucial. In 2025, logistic regression continues to be valuable in areas like credit scoring, healthcare diagnostics, and customer churn prediction.
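The sketch below shows how the logistic function turns a linear score into a probability, and how the weights can be learned with plain gradient descent on a single feature. The names `sigmoid` and `train_logistic`, the learning rate, the number of epochs, and the toy data are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real score to a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.5, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log loss with respect to w and b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical binary data: small x values are class 0, large ones class 1.
xs = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(xs, ys)
prob_low = sigmoid(w * 1.0 + b)   # probability of class 1 at x = 1.0
prob_high = sigmoid(w * 3.5 + b)  # probability of class 1 at x = 3.5
print(prob_low < 0.5 < prob_high)
```

The sign and size of `w` indicate how the predictor shifts the odds of the positive outcome, which is the interpretability benefit described above.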

3. Decision trees

Decision trees mimic human decision-making by using a tree-like structure of conditions to classify data or predict outcomes. Each internal node represents a decision rule, each branch represents an outcome of that rule, and each leaf holds the final prediction. They are popular due to their ease of interpretation and ability to handle both categorical and numerical data. Decision trees are used in fraud detection, loan approval systems, and diagnostic tools, although they can overfit if not pruned correctly.
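As a rough illustration of how a single decision rule might be chosen, the sketch below searches for the threshold on one numeric feature that produces the purest split. Gini impurity is assumed as the split criterion (a common choice, but not the only one), and `gini`, `best_split`, and the transaction data are hypothetical.

```python
def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Return (threshold, weighted impurity) for the best rule 'value <= threshold'."""
    best_threshold, best_score = None, float("inf")
    for t in sorted(set(values)):
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        if not left or not right:
            continue  # a rule must send data down both branches
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score


# Hypothetical transaction amounts and labels.
amounts = [20, 35, 50, 400, 650, 900]
labels = ["ok", "ok", "ok", "fraud", "fraud", "fraud"]
threshold, impurity = best_split(amounts, labels)
print(threshold, impurity)  # 50 0.0
```

A full tree repeats this search recursively on each branch, which is also why unpruned trees can keep splitting until they memorize the training data.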

4. Random forest

Random forest is an ensemble technique that builds multiple decision trees and aggregates their results to improve accuracy and stability. It reduces overfitting by averaging the predictions of many trees (or taking a majority vote for classification) and is effective in both regression and classification problems. Random forest is widely used in feature selection, risk assessment, and large-scale data analysis because of its robustness and versatility.
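The aggregation idea can be sketched with a heavily simplified ensemble: each "tree" here is only a one-rule stump trained on a bootstrap sample, and predictions are combined by majority vote. This is a bagging-style illustration under those assumptions. A real random forest grows deeper trees and also randomizes the features considered at each split. All names and data are hypothetical.

```python
import random
from collections import Counter


def fit_stump(points):
    """Pick the threshold t (from the sample) with the fewest errors for 'value > t => 1'."""
    best_t, best_err = None, None
    for t, _ in points:
        err = sum((v > t) != bool(y) for v, y in points)
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def fit_forest(points, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(points) for _ in points]
        forest.append(fit_stump(sample))
    return forest


def predict(forest, value):
    """Aggregate the individual votes by majority."""
    votes = Counter(int(value > t) for t in forest)
    return votes.most_common(1)[0][0]


# Hypothetical (value, label) pairs: low values are class 0, high values class 1.
data = [(1, 0), (2, 0), (3, 0), (4, 0), (7, 1), (8, 1), (9, 1), (10, 1)]
forest = fit_forest(data)
low_vote = predict(forest, 0)
high_vote = predict(forest, 12)
print(low_vote, high_vote)  # 0 1
```

Because every stump sees a slightly different sample, individual thresholds vary, and the vote smooths out those differences.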

5. Support vector machines (SVM)

SVM is a powerful classification algorithm that identifies the hyperplane separating the classes with the widest margin. With the kernel trick, SVM can model complex, nonlinear relationships by implicitly working in a high-dimensional feature space. Though computationally intensive on large datasets, SVMs perform well in fields like handwriting recognition, bioinformatics, and image classification, making them a go-to option where precision matters.
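The kernel trick can be checked numerically on a small case. The sketch below assumes a degree-2 polynomial kernel on 2D inputs and shows that it gives the same value as a dot product in an explicit 3D feature space, without ever building that space. The function names are illustrative, and this demonstrates only the kernel identity, not a full SVM solver.

```python
import math


def poly_kernel(x, y):
    """Degree-2 polynomial kernel: (x . y) ** 2, computed in the original 2D space."""
    return (x[0] * y[0] + x[1] * y[1]) ** 2


def feature_map(x):
    """Explicit feature space that the kernel above corresponds to."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


x, y = (1.0, 2.0), (3.0, 4.0)
kernel_value = poly_kernel(x, y)                       # 121.0
explicit_value = dot(feature_map(x), feature_map(y))   # same value, up to rounding
print(kernel_value, explicit_value)
```

For higher-degree or RBF kernels the explicit feature space becomes very large or infinite, which is why computing the kernel directly matters.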

6. K-nearest neighbors (KNN)

KNN is a non-parametric, lazy learning algorithm that classifies a new data point based on the majority class of its nearest neighbors. It’s simple to implement and effective for tasks like recommendation systems and pattern recognition. However, KNN can be slow with large datasets and requires careful tuning of parameters such as the number of neighbors (K) and the distance metric used.
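A minimal sketch of the voting step, assuming Euclidean distance and K = 3. The function name `knn_predict` and the labeled points are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # "Lazy" learning: no training step, just sort stored points by distance.
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical 2D points with class labels.
train = [
    ((1, 1), "A"), ((1, 2), "A"), ((2, 1), "A"),
    ((6, 6), "B"), ((7, 6), "B"), ((6, 7), "B"),
]
near_a = knn_predict(train, (1.5, 1.5))
near_b = knn_predict(train, (6.5, 6.5))
print(near_a, near_b)  # A B
```

Every prediction sorts the whole training set, which is the source of the slowdown on large datasets mentioned above.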

7. Naive Bayes

Naive Bayes is a family of probabilistic classifiers based on Bayes’ theorem, assuming that features are conditionally independent given the class. Despite this simplifying assumption, it often performs surprisingly well, especially in text classification, spam detection, and sentiment analysis. In 2025, Naive Bayes remains relevant due to its speed, simplicity, and scalability in processing large amounts of textual or categorical data.
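To illustrate, here is a small sketch of a word-count (multinomial-style) Naive Bayes text classifier. It assumes whitespace tokenization and add-one (Laplace) smoothing. The function names and the four training messages are made up for the example.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs):
    """docs: list of (text, label). Count documents per class and words per class."""
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in docs:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab


def predict_nb(model, text):
    """Pick the class with the highest log prior plus summed log word likelihoods."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Independence assumption: each word contributes separately.
            # Add-one smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical training messages.
docs = [
    ("win cash prize now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train_nb(docs)
first = predict_nb(model, "free cash prize")
second = predict_nb(model, "monday meeting")
print(first, second)  # spam ham
```

Training is a single counting pass over the data, which is where the speed and scalability come from.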

8. Gradient boosting machines (GBM)

Gradient boosting builds models in a sequential manner where each new model corrects errors made by the previous ones. Tools like XGBoost, LightGBM, and CatBoost have made gradient boosting popular in competitions and commercial projects. It excels in handling structured data and provides high accuracy, making it ideal for applications in fintech, healthcare analytics, and predictive modeling. Tuning GBMs effectively requires some experience, but the results are often superior to traditional models.
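The sequential error-correcting idea can be sketched for regression with squared error, where each new model is a one-split stump fit to the current residuals. This is a simplified illustration, not how XGBoost, LightGBM, or CatBoost are implemented. The names, learning rate, and data are assumptions, and the stump search assumes at least two distinct input values.

```python
def fit_stump(xs, residuals):
    """Find the split 'x <= t' that best fits the residuals; return (t, left_mean, right_mean)."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the largest value so the right side is non-empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, t, left_mean, right_mean)
    return best[1:]


def stump_predict(stump, x):
    t, left_value, right_value = stump
    return left_value if x <= t else right_value


def fit_gbm(xs, ys, n_rounds=50, learning_rate=0.3):
    """Start from the mean, then repeatedly fit a stump to what is still unexplained."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x) for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def gbm_predict(model, x):
    base, stumps, lr = model
    return base + lr * sum(stump_predict(s, x) for s in stumps)


def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


# Hypothetical data with a rough upward trend.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 3.1, 2.9, 5.0, 5.2]
model = fit_gbm(xs, ys)
mse_base = mse([model[0]] * len(xs), ys)
mse_boosted = mse([gbm_predict(model, x) for x in xs], ys)
print(mse_boosted < mse_base)  # True
```

The learning rate and the number of rounds are two of the settings that typically need tuning, since more rounds fit the training data more closely.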

9. K-means clustering

K-means is a widely used unsupervised learning algorithm that partitions data into K distinct clusters based on feature similarity. It is useful in market segmentation, anomaly detection, and customer grouping. Though K-means assumes spherical cluster shapes and can be sensitive to outliers, it is fast and effective for exploratory data analysis when combined with visualizations or dimensionality reduction techniques.
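The partitioning step can be sketched as alternating between assigning points to the nearest centroid and moving each centroid to the mean of its points. In this sketch the starting centroids are passed in explicitly to keep the example deterministic, which is an assumption. Practical implementations usually pick them randomly or with a scheme such as k-means++. The function name and points are hypothetical.

```python
import math


def kmeans(points, centroids, n_iters=10):
    """Alternate nearest-centroid assignment and centroid updates for n_iters rounds."""
    for _ in range(n_iters):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical 2D points forming two visible groups.
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 9.5), (8.5, 9)]
centroids, clusters = kmeans(points, centroids=[(1, 1), (8, 8)])
print(len(clusters[0]), len(clusters[1]))  # 3 3
```

Because each centroid is a plain average, a single distant outlier can pull it away from the bulk of its cluster, which is the sensitivity noted above.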

10. Deep learning with neural networks

Deep learning models, inspired by the structure of the human brain, use neural networks with multiple layers to process complex data. They are particularly powerful in domains such as natural language processing, computer vision, and speech recognition. Frameworks like TensorFlow and PyTorch have made it easier to build and deploy neural networks at scale. Mastering concepts like convolutional layers, recurrent units, activation functions, and backpropagation is crucial for anyone aiming to build cutting-edge AI systems in 2025.
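To show what backpropagation computes, the sketch below uses a deliberately tiny network (one input, one tanh hidden unit, one linear output) and checks the hand-derived gradients against a numerical estimate. The architecture, parameter values, and function names are assumptions chosen to keep the example small. Frameworks such as TensorFlow and PyTorch automate this gradient computation for real networks.

```python
import math


def forward(params, x):
    """y_hat = w2 * tanh(w1 * x + b1) + b2; also return the hidden activation."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return w2 * h + b2, h


def loss(params, x, y):
    y_hat, _ = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Apply the chain rule from the output back to each parameter."""
    w1, b1, w2, b2 = params
    y_hat, h = forward(params, x)
    d_out = y_hat - y                          # dLoss / dy_hat
    d_hidden = d_out * w2 * (1.0 - h ** 2)     # through the tanh activation
    return [d_hidden * x, d_hidden, d_out * h, d_out]  # grads for w1, b1, w2, b2


def numerical_grad(params, x, y, eps=1e-6):
    """Central-difference estimate, used only to verify backprop."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads


# Hypothetical parameter values and a single training example.
params = [0.5, -0.2, 1.5, 0.1]
analytic = backprop(params, x=0.8, y=1.0)
numeric = numerical_grad(params, x=0.8, y=1.0)
max_gap = max(abs(a - n) for a, n in zip(analytic, numeric))
print(max_gap < 1e-6)  # True
```

Training then consists of repeatedly nudging each parameter against its gradient, layer by layer, across many examples.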